Object Store

Resource Overview

An Object Store provisions an Amazon S3 bucket. Amazon Simple Storage Service (S3) provides scalable object storage in the cloud and a simple way to retrieve your data from anywhere on the web. An S3 bucket lets you store a virtually unlimited amount of data, so you do not need to plan capacity as your data grows. Each object in a bucket holds the data itself, any optional metadata you choose to provide, and a unique identifier (its key) used to retrieve it.

Key Features of Amazon S3 include:

- High durability, availability, and scalability of your data

- Support for security standards and compliance requirements for data regulation

- A range of options to transfer data to and from your object store quickly

- Support for popular disaster recovery architectures for data protection

Stackery automatically assigns your Object Store a globally unique name so that it can be referenced by a Function or Docker Task. These resources are granted the AWS permissions needed to create, read, update, and delete data in the Object Store.

Event Subscription

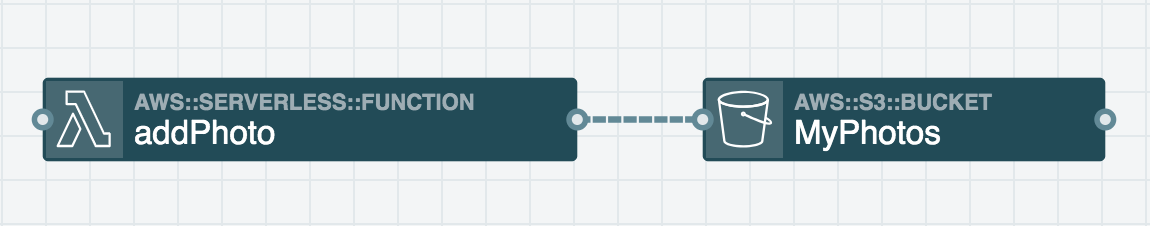

Event subscription wires (solid line) visualize and configure event subscription integrations between two resources.

The following resources can be subscribed to an Object Store:

- CDN (Content Delivery Network)

- Function

Service Discovery

Service discovery wires (dashed line) provide compute resources (Function, Edge Function, Docker Task) with the permissions and environment variables required to perform actions using cloud resources within the stack. This resource is on the receiving end of a service discovery wire originating from compute resources.

The following compute resources can use a service discovery wire to access an Object Store resource:

- Function

- Docker Task

Configurable Properties

CloudFormation Logical ID

The unique identifier used to reference this resource in the stack template. Defining a custom Logical ID is recommended, as it allows you to quickly identify a resource and any associated sub-resources when working with your stack in AWS, or anywhere outside of the Stackery Dashboard. As a project grows, it becomes useful in quickly spotting this resource in template.yaml or while viewing a stack in Template View mode.

The Logical ID of all sub-resources associated with this Object Store will be prefixed with this value.

The identifier you provide must only contain alphanumeric characters (A-Za-z0-9) and be unique within the stack.
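The naming rule above can be checked before editing the template. The following is a minimal sketch, not part of Stackery or CloudFormation tooling; the helper name `is_valid_logical_id` and the `existing_ids` argument are hypothetical and only illustrate the two constraints stated here (alphanumeric characters, unique within the stack).

```python
import re

# Alphanumeric characters only (A-Za-z0-9), at least one character.
_LOGICAL_ID_PATTERN = re.compile(r"[A-Za-z0-9]+")


def is_valid_logical_id(candidate, existing_ids=()):
    """Return True if `candidate` is alphanumeric and not already in use.

    `existing_ids` is a hypothetical collection of Logical IDs that are
    already present in the stack template.
    """
    if not _LOGICAL_ID_PATTERN.fullmatch(candidate):
        return False
    return candidate not in existing_ids
```

For example, `is_valid_logical_id("ObjectStore2")` passes, while `"Object-Store"` is rejected because of the hyphen.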

Default Logical ID Example: ObjectStore2

IMPORTANT: AWS uses the Logical ID of each resource to coordinate and apply updates to the stack when deployed. If a resource's Logical ID changes (or any modification results in a change), CloudFormation will delete the currently deployed resource and create a new one in its place when the updated stack is deployed.

Enable Website Hosting

When enabled, this setting allows you to host a static website from this Object Store. An Index Document stored in the Object Store must be specified to act as the root (default page) of the website.

Index Document

When Enable Website Hosting above is enabled, the HTML file specified here will be rendered when a user visits the Object Store's URL. This file will need to be inside of the Object Store. When navigating to a directory, the website will respond with the contents of the index document within the directory.
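As an illustration, an index document could be uploaded with the AWS SDK for Python. This is a minimal sketch under stated assumptions: a boto3 S3 client is passed in by the caller, `index.html` is the configured Index Document, and `upload_page` is a hypothetical helper name. Setting `ContentType` is worthwhile because S3 otherwise stores the object as `binary/octet-stream`, which browsers tend to download rather than render.

```python
import mimetypes


def upload_page(s3_client, bucket_name, key, html):
    """Upload one page of a static site with a Content-Type guessed from its key."""
    content_type, _ = mimetypes.guess_type(key)
    return s3_client.put_object(
        Bucket=bucket_name,
        Key=key,
        Body=html.encode("utf-8"),
        # Fall back to S3's own default when the type cannot be guessed.
        ContentType=content_type or "binary/octet-stream",
    )


# Possible usage inside a Function (assumes boto3 and BUCKET_NAME are available):
#   import boto3, os
#   upload_page(boto3.client("s3"), os.environ["BUCKET_NAME"],
#               "index.html", "<h1>Hello</h1>")
```

Uploading a second `index.html` under a prefix such as `docs/` would give that directory its own default page, as described above.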

Block Public Access

When enabled, objects in the Object Store will not be uploadable or downloadable by unauthenticated, public users. It is recommended to block public access at the bucket level to be absolutely sure private documents are not exposed inadvertently.

Specifically, this setting ignores any public access grants provided in per-object ACLs or in the S3 Bucket Policy. Object ACLs and bucket policies granting public access may still be set, but they will have no effect.

This setting is implicitly set to false when Enable Website Hosting is enabled.
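To confirm what is actually in effect on a deployed bucket, the S3 Block Public Access configuration can be read back with boto3's `get_public_access_block`. This is a sketch only: `public_access_flags` is a hypothetical helper, this page does not state which of the four S3 flags Stackery sets, and the call needs the `s3:GetBucketPublicAccessBlock` permission, which may not be granted by a service discovery wire.

```python
# The four flags that make up S3 Block Public Access for a bucket.
_FLAGS = (
    "BlockPublicAcls",
    "IgnorePublicAcls",
    "BlockPublicPolicy",
    "RestrictPublicBuckets",
)


def public_access_flags(s3_client, bucket_name):
    """Return a dict mapping each Block Public Access flag to True or False.

    Assumes `s3_client` is a boto3 S3 client. boto3 raises a ClientError
    if the bucket has no public access block configuration at all.
    """
    response = s3_client.get_public_access_block(Bucket=bucket_name)
    config = response["PublicAccessBlockConfiguration"]
    return {flag: bool(config.get(flag, False)) for flag in _FLAGS}
```

A bucket with website hosting enabled would be expected to report at least some of these flags as `False`.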

IAM Permissions

When connected by a service discovery wire (dashed wire), a Function or Docker Task will add the following IAM policy to its role and gain permission to access this resource.

S3CrudPolicy

Grants a Function or Docker Task permission to create, read, update, and delete objects from your Object Store.

In addition to the above policy, Function and Docker Task resources will be granted permission to perform the following actions:

- s3:GetObjectAcl: Read the Access Control List specified on an object within the Object Store

- s3:PutObjectAcl: Modify the Access Control List of an object within the Object Store

The above Access Control List actions make it possible for you to create public objects (files). Publicly accessible objects within an Object Store are typical for static websites hosted on Amazon S3.
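The two ACL actions map to the boto3 calls `put_object_acl` and `get_object_acl`. The sketch below assumes a boto3 S3 client is passed in; the helper names `make_public` and `is_publicly_readable` are hypothetical. Note that a public ACL has no effect while Block Public Access is enabled on the Object Store.

```python
# Grantee URI that S3 uses for "everyone" (anonymous, public users).
ALL_USERS_URI = "http://acs.amazonaws.com/groups/global/AllUsers"


def make_public(s3_client, bucket_name, key):
    """Apply the canned 'public-read' ACL to an existing object (s3:PutObjectAcl)."""
    s3_client.put_object_acl(Bucket=bucket_name, Key=key, ACL="public-read")


def is_publicly_readable(s3_client, bucket_name, key):
    """Return True if the object's ACL grants read access to all users (s3:GetObjectAcl)."""
    acl = s3_client.get_object_acl(Bucket=bucket_name, Key=key)
    return any(
        grant.get("Grantee", {}).get("URI") == ALL_USERS_URI
        and grant.get("Permission") in ("READ", "FULL_CONTROL")
        for grant in acl.get("Grants", [])
    )
```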

Environment Variables

When connected by a service discovery wire (dashed wire), a Function or Docker Task will automatically populate and reference the following environment variables in order to interact with this resource.

BUCKET_NAME

The Logical ID of the Object Store resource.

Example: ObjectStore2

BUCKET_ARN

The Amazon Resource Name of the Amazon S3 Bucket.

Example: arn:aws:s3:::ObjectStore2
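A compute resource can read both variables at startup and fail early if the service discovery wire is missing. The following is a minimal sketch; `load_object_store_env` and `object_arn` are hypothetical helper names, and the `environ` parameter exists only so the function can be exercised without real environment variables.

```python
import os


def load_object_store_env(environ=None):
    """Return (bucket_name, bucket_arn) from the environment, or raise if absent."""
    environ = os.environ if environ is None else environ
    missing = [name for name in ("BUCKET_NAME", "BUCKET_ARN") if name not in environ]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return environ["BUCKET_NAME"], environ["BUCKET_ARN"]


def object_arn(bucket_arn, key):
    """Build the ARN of a single object: the bucket ARN, a slash, then the key."""
    return f"{bucket_arn}/{key}"
```

With the example values above, `object_arn("arn:aws:s3:::ObjectStore2", "reports/a.txt")` yields `arn:aws:s3:::ObjectStore2/reports/a.txt`.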

AWS SDK Code Example

Language-specific examples of AWS SDK calls using the environment variables discussed above.

Add a file to an Object Store

Node.js:

    // Load AWS SDK and create a new S3 object
    const AWS = require("aws-sdk");
    const s3 = new AWS.S3();
    const bucketName = process.env.BUCKET_NAME; // supplied by Function service-discovery wire

    exports.handler = async message => {
      const testObject = "Sample Text";

      // Construct parameters for the putObject call
      const params = {
        Bucket: bucketName,
        Body: testObject,
        Key: 'Object Name',
        ACL: 'public-read' // ** Not required. updates access control list of object
      };

      await s3.putObject(params).promise();
      console.log('Object stored in ' + bucketName);
    }

Python:

    import boto3
    import os

    # Create an S3 client
    s3 = boto3.client('s3')
    bucket_name = os.environ['BUCKET_NAME']  # Supplied by Function service-discovery wire

    def handler(message, context):
        # Add a file to your Object Store
        response = s3.put_object(
            Bucket=bucket_name,
            Key='Object Name',
            Body='Sample Text',
            ACL='public-read'
        )
        return response
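The S3CrudPolicy described earlier also covers reading and deleting objects. As a complement to the upload examples, here is a minimal sketch of those two operations using boto3's `get_object` and `delete_object`. It assumes a boto3 S3 client is passed in and that the object body is UTF-8 text; `read_text` and `delete_object_by_key` are hypothetical helper names.

```python
def read_text(s3_client, bucket_name, key):
    """Download an object and return its body decoded as UTF-8 text."""
    response = s3_client.get_object(Bucket=bucket_name, Key=key)
    # 'Body' is a streaming object; read() returns the full content as bytes.
    return response["Body"].read().decode("utf-8")


def delete_object_by_key(s3_client, bucket_name, key):
    """Delete a single object from the Object Store."""
    s3_client.delete_object(Bucket=bucket_name, Key=key)


# Possible usage inside a Function (assumes boto3 and BUCKET_NAME are available):
#   import boto3, os
#   s3 = boto3.client("s3")
#   text = read_text(s3, os.environ["BUCKET_NAME"], "Object Name")
#   delete_object_by_key(s3, os.environ["BUCKET_NAME"], "Object Name")
```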

Related AWS Documentation

AWS Documentation: AWS::S3::Bucket